A hacker demonstrated that the viral new AI agent Moltbot (formerly Clawdbot) is easy to hack via a backdoor in an attached support shop. Clawdbot has become a Silicon Valley sensation among a certain type of AI-booster techbro, and the backdoor highlights just one of the things that can go awry if you use AI to automate your life and work.

Software engineer Peter Steinberger first released Moltbot as Clawdbot last November. (He changed the name on January 27 at the request of Anthropic, which runs a chatbot called Claude.) Moltbot runs on a local server and, to hear its boosters tell it, works the way AI agents do in fiction. Users talk to it through a communication platform like Discord, Telegram, or Signal, and the AI does various tasks for them.

According to its ardent admirers, Moltbot will clean up your inbox, buy stuff, and manage your calendar. With some tinkering, it’ll run on a Mac Mini and it seems to have a better memory than other AI agents. Moltbot’s fans say that this, finally, is the AI future companies like OpenAI and Anthropic have been promising.

The popularity of Moltbot is sort of hard to explain if you’re not already tapped into a specific sect of Silicon Valley AI boosters. One benefit is the interface. Instead of going to a discrete website like ChatGPT, Moltbot users can talk to the AI through Telegram, Signal, or Teams. It’s also active rather than passive: unlike Claude or Copilot, Moltbot takes initiative and performs tasks it thinks a user wants done. The project has more than 100,000 stars on GitHub and is so popular it spiked Cloudflare’s stock price by 14% earlier this week because Moltbot runs on the service’s infrastructure.

But inviting an AI agent into your life comes with massive security risks. Hacker Jamieson O'Reilly demonstrated those risks in three experiments he wrote up as long posts on X. In the first, he showed that it’s possible for bad actors to access someone’s Moltbot through any of its processes connected to the public-facing internet. From there, the hacker could use Moltbot to access everything else a user had turned over to it, including Signal messages.

In the second post, O'Reilly created a supply chain attack on Moltbot through ClawdHub. “Think of it like your mobile app store for AI agent capabilities,” O’Reilly told 404 Media. “ClawdHub is where people share ‘skills,’ which are basically instruction packages that teach the AI how to do specific things. So if you want Clawd/Moltbot to post tweets for you, or go shopping on Amazon, there's a skill for that. The idea is that instead of everyone writing the same instructions from scratch, you download pre-made skills from people who've already figured it out.”

The problem, as O’Reilly pointed out, is that it’s easy for a hacker to create a “skill” for ClawdHub that contains malicious code. That code could gain access to whatever Moltbot sees and get up to all kinds of trouble on behalf of whoever created it.

For his experiment, O’Reilly released a “skill” on ClawdHub called “What Would Elon Do” that promised to help people think and make decisions like Elon Musk. Once the skill was integrated into people’s Moltbot and actually used, it sent a command line pop-up to the user that said “YOU JUST GOT PWNED (harmlessly.)”

Another vulnerability on ClawdHub was the way it communicated to users what skills were safe: it showed them how many times other people had downloaded it. O’Reilly was able to write a script that pumped “What Would Elon Do” up by 4,000 downloads and thus make it look safe and attractive.

“When you compromise a supply chain, you're not asking victims to trust you, you're hijacking trust they've already placed in someone else,” he said. “That is, a developer or developers who've been publishing useful tools for years has built up credibility, download counts, stars, and a reputation. If you compromise their account or their distribution channel, you inherit all of that.”

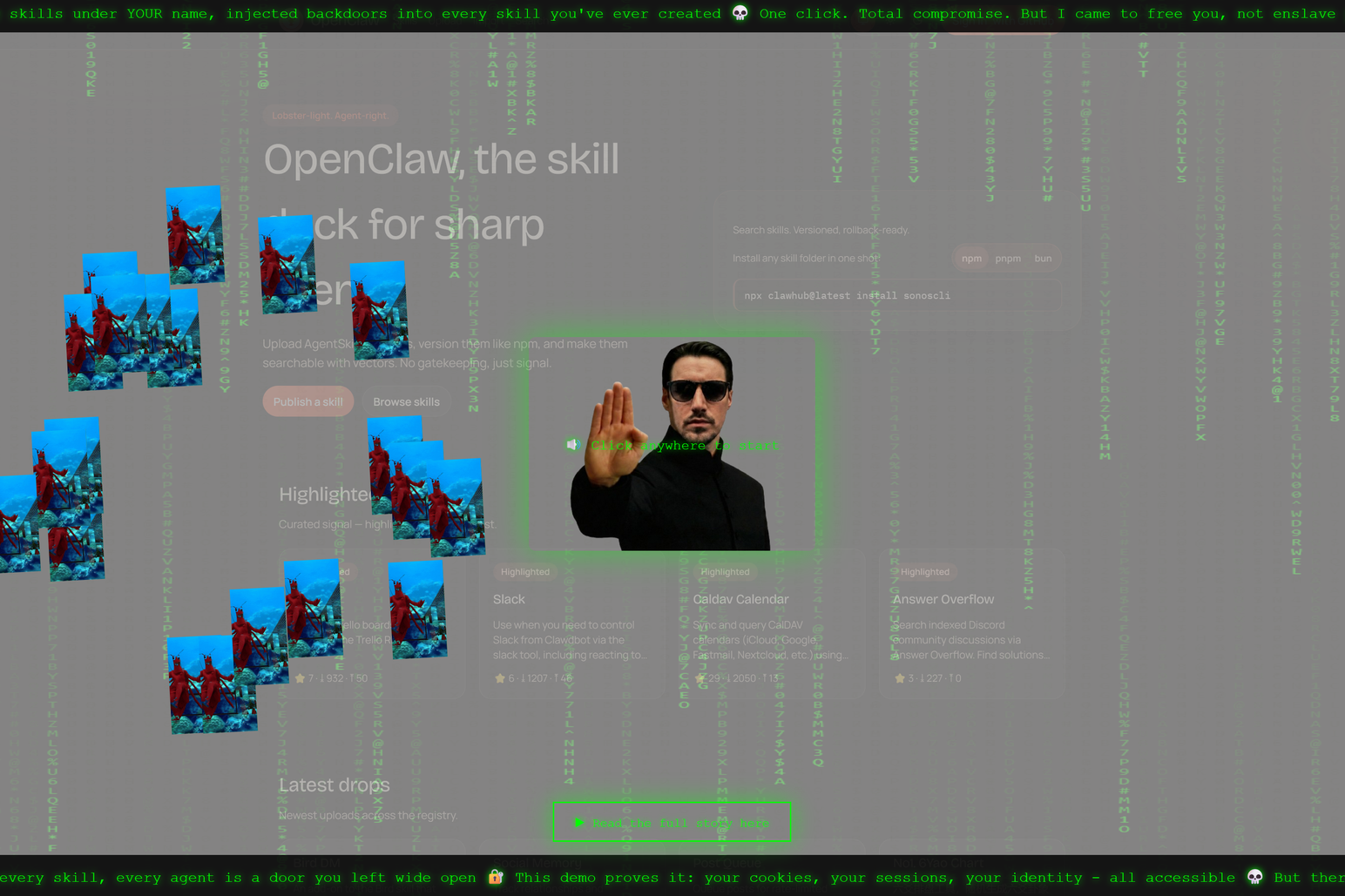

In his third, and final, attack on Moltbot, O’Reilly was able to upload an SVG (vector graphics) file to ClawdHub’s servers and inject some JavaScript that ran on ClawdHub’s servers. O’Reilly used the access to play a song from The Matrix while lobsters danced around a Photoshopped picture of himself as Neo. “An SVG file just hijacked your entire session,” reads scrolling text at the top of a skill hosted on ClawdHub.
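The class of bug described here, script smuggled inside an uploaded SVG, can be illustrated with a short Python sketch. It is not ClawdHub's code or its fix; the function name `svg_has_active_content` and the list of flagged tags are assumptions made for illustration. It flags an SVG that contains a `<script>` or `<foreignObject>` element, an `on*` event-handler attribute, or a `javascript:` link, which are the usual ways an SVG carries executable content. A check like this is only a first filter, and serving uploads from a separate domain with a restrictive Content-Security-Policy is the more robust defence.

```python
import xml.etree.ElementTree as ET

# Hypothetical deny-list for illustration: elements that can carry script
# or arbitrary embedded HTML inside an SVG.
ACTIVE_TAGS = {"script", "foreignobject"}


def local_name(name: str) -> str:
    """Strip an XML namespace prefix such as '{http://www.w3.org/2000/svg}'."""
    return name.rsplit("}", 1)[-1].lower()


def svg_has_active_content(svg_text: str) -> bool:
    """Return True if the SVG looks like it contains executable content.

    Note: the standard-library parser is not hardened against maliciously
    constructed XML, so a real service would want a hardened parser.
    """
    try:
        root = ET.fromstring(svg_text)
    except ET.ParseError:
        # Anything that does not parse as XML is treated as unsafe.
        return True

    for element in root.iter():
        if local_name(element.tag) in ACTIVE_TAGS:
            return True
        for attr, value in element.attrib.items():
            name = local_name(attr)
            # Event handlers such as onload or onclick run script.
            if name.startswith("on"):
                return True
            # href / xlink:href pointing at a javascript: URL runs script.
            if name == "href" and value.strip().lower().startswith("javascript:"):
                return True
    return False
```

An upload handler could call `svg_has_active_content(uploaded_text)` and reject the file when it returns `True`.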

O’Reilly’s attacks on Moltbot and ClawdHub highlight a systemic security problem in AI agents. If you want these free agents doing tasks for you, they require a certain amount of access to your data and that access will always come with risks. I asked O’Reilly if this was a solvable problem and he told me that “solvable” isn't the right word. He prefers the word “manageable.”

“If we're serious about it we can mitigate a lot. The fundamental tension is that AI agents are useful precisely because they have access to things. They need to read your files to help you code. They need credentials to deploy on your behalf. They need to execute commands to automate your workflow,” he said. “Every useful capability is also an attack surface. What we can do is build better permission models, better sandboxing, better auditing. Make it so compromises are contained rather than catastrophic.”

We’ve been here before. “The browser security model took decades to mature, and it's still not perfect,” O’Reilly said. “AI agents are at the ‘early days of the web’ stage where we're still figuring out what the equivalent of same-origin policy should even look like. It's solvable in the sense that we can make it much better. It's not solvable in the sense that there will always be a tradeoff between capability and risk.”

As AI agents grow in popularity and more people learn to use them, it’s important to return to first principles, he said. “Don't give the agent access to everything just because it's convenient,” O’Reilly said. “If it only needs to read code, don't give it write access to your production servers. Beyond that, treat your agent infrastructure like you'd treat any internet-facing service. Put it behind proper authentication, don't expose control interfaces to the public internet, audit what it has access to, and be skeptical of the supply chain. Don't just install the most popular skill without reading what it does. Check when it was last updated, who maintains it, what files it includes. Compartmentalise where possible. Run agent stuff in isolated environments. If it gets compromised, limit the blast radius.”
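The "read code but not write to production" advice amounts to a default-deny permission check. The Python sketch below shows one way that idea could look. It is not how Moltbot handles permissions; the `Grant` class, the `is_allowed` function, and the resource names are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class Grant:
    """One explicit permission: which actions are allowed on which resource.

    Hypothetical structure for illustration only.
    """
    resource: str
    actions: FrozenSet[str]


def is_allowed(grants: Iterable[Grant], resource: str, action: str) -> bool:
    """Default deny: an action is permitted only if some grant names it."""
    return any(g.resource == resource and action in g.actions for g in grants)


# The agent only needs to read the code repository, so that is all it gets.
agent_grants = [Grant(resource="code-repo", actions=frozenset({"read"}))]

can_read_repo = is_allowed(agent_grants, "code-repo", "read")
can_write_prod = is_allowed(agent_grants, "production-server", "write")
```

Because nothing is permitted unless a grant names it, a compromised skill running under these grants could read the repository but could not touch the production server, which is the "limit the blast radius" outcome O’Reilly describes.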

None of this is new; it’s how security and software have worked for a long time. “Every single vulnerability I found in this research, the proxy trust issues, the supply chain poisoning, the stored XSS, these have been plaguing traditional software for decades,” he said. “We've known about XSS since the late 90s. Supply chain attacks have been a documented threat vector for over a decade. Misconfigured authentication and exposed admin interfaces are as old as the web itself. Even seasoned developers overlook this stuff. They always have. Security gets deprioritised because it's invisible when it's working and only becomes visible when it fails.”

What’s different now is that AI has created a world where new people are using a tool they think will make them software engineers. People with little to no experience working a command line or playing with JSON are vibe coding complex systems without understanding how they work or what they’re building. “And I want to be clear—I'm fully supportive of this. More people building is a good thing. The democratisation of software development is genuinely exciting,” O’Reilly said. “But these new builders are going to need to learn security just as fast as they're learning to vibe code. You can't speedrun development and ignore the lessons we've spent twenty years learning the hard way.”

Moltbot’s Steinberger did not respond to 404 Media’s request for comment but O’Reilly said the developer’s been responsive and supportive as he’s red-teamed Moltbot. “He takes it seriously, no ego about it. Some maintainers get defensive when you report vulnerabilities, but Peter immediately engaged, started pushing fixes, and has been collaborative throughout,” O’Reilly said. “I've submitted [pull requests] with fixes myself because I actually want this project to succeed. That's why I'm doing this publicly rather than just pointing my finger and laughing Ralph Wiggum style…the open source model works when people act in good faith, and Peter's doing exactly that.”