Content warning: This story contains graphic images of violence.

A free AI image detector that's been covered in the New York Times and the Wall Street Journal is currently identifying a photograph of what Israel says is a burnt corpse of a baby killed in Hamas’s recent attack on Israel as being generated by AI.

However, the image does not show any signs it was created by AI, according to Hany Farid, a professor at UC Berkeley and one of the world’s leading experts on digitally manipulated images.

The image was first tweeted by Israel’s official Twitter account, as well as the official Twitter account of the country’s Prime Minister Benjamin Netanyahu’s office, after Israel, President Joe Biden, and several media outlets said that 40 Israeli babies were beheaded by Hamas in its attack. No evidence of these alleged beheadings has been made public yet, and that statement has been walked back entirely by the White House, and to a lesser extent, Israel.

The idea that the photograph was AI-generated started after it was tweeted out by conservative Jewish commentator Ben Shapiro on Thursday morning. That afternoon, Jackson Hinkle, who has more than half a million Twitter followers, tweeted a screenshot of Shapiro’s tweet sharing the image, alongside a screenshot of the free AI image detector tool AI or Not, made by a company called Optic, which determined the image was made by AI. When we ran the image through Optic’s tool ourselves, the tool again said the image is AI-generated.

The idea that this image is AI-generated has spread widely on Twitter, and is being used to suggest that official Israeli accounts are spreading AI-generated misinformation.

Farid’s analysis rests on the fact that current AI image generators consistently fail to replicate reality as it’s captured in the photo.

“One of the things these generators have trouble with is highly structured shapes and straight lines,” Farid told me on a call. “If you see the leg of the table and the screw, that all appears perfectly and that doesn’t typically happen with AI generators.”

See, for example, how in the now-infamous image from our story about an AI-generated image of Spongebob doing 9/11, the twin towers appear to bend and curve because the lines are not straight, or how all the little knobs and buttons in the cockpit blur and jumble together.

The other clear tell that this is not an AI-generated image is the shadows, something that Farid wrote a paper about. Note how a light source from above casts shadows from the man’s fingers, the white sheet, and what appears to be a gurney, and how all of those shadows are consistent with one another.

“The structural consistencies, the accurate shadows, the lack of artifacts we tend to see in AI, that leads me to believe it’s not even partially AI generated,” Farid said. As to the content of the photograph itself, Farid couldn’t say what we are looking at or when the photograph was taken. “I don’t know what it’s showing. It’s not obvious that’s a person. You would have to talk to a coroner to tell you what this is.”

Farid also ran the image through his own image classifiers, which are trained on a large number of real and AI-generated images and which classified this image as real. These classifiers work like other AI image detection tools, Farid said. Four other AI image detection tools I’ve tried also found that the image is not AI-generated.

“In isolation, is that going to be the definitive answer? No,” Farid said. “Classifiers are good, they’re part of the toolkit, but they’re just one part of the toolkit. You need to reason about the image in its entirety. When you look at these things, there need to be a series of tests. You can’t just push a button to get an answer. That’s not how it works. It’s not CSI.”

We don’t know why Optic is saying that the photograph is AI-generated. Its website warns that “AI or Not may produce inaccurate results,” but does not explain how or why it decides that any given image is or is not AI. Optic did not immediately respond to a request for comment.

Farid said these tools are not a good way to determine if an image is real or AI-generated, which is why classifiers are only one of a few tools he used to determine the photograph wasn’t generated by an AI.

“Most of these automated tools, automated being the key word here, they ingest a bunch of images that are real, a bunch that are generated, and they train another AI system to learn the difference. They’re not very explainable. They’re black boxes that come up with an answer. And at their best they operate at 90 percent accuracy. That’s pretty good. But they tend to suffer with out-of-domain images. If it’s lower resolution, if it doesn’t share properties it was trained on, it’s going to make a lot of mistakes. That’s a classic problem with these models.”
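To put that 90 percent figure in context, here is a rough back-of-the-envelope sketch. It is not a description of how Optic or any specific detector works. It assumes, purely for illustration, that a detector correctly flags 90 percent of AI images and correctly passes 90 percent of real ones; the function name `posterior_ai` and the example base rates are hypothetical. The point is that how much a "this is AI" verdict should move you depends heavily on how common AI images are among the images being checked.

```python
def posterior_ai(prior_ai, sensitivity=0.90, specificity=0.90):
    """Chance an image is AI-generated, given the detector flagged it.

    prior_ai:    assumed share of AI images among everything being checked
    sensitivity: assumed share of AI images the detector correctly flags
    specificity: assumed share of real images the detector correctly passes
    All three numbers are illustrative assumptions, not measured values.
    """
    true_flags = sensitivity * prior_ai                    # AI images flagged as AI
    false_flags = (1.0 - specificity) * (1.0 - prior_ai)   # real images flagged as AI
    return true_flags / (true_flags + false_flags)         # Bayes' rule


# Try a few hypothetical base rates of AI images in the pool being tested.
for prior in (0.50, 0.10, 0.01):
    print(f"AI share {prior:.0%}: P(AI | flagged) = {posterior_ai(prior):.1%}")
```

Under these assumptions, a flag is right about nine times in ten only if half of the images being checked are AI to begin with. If one in ten is AI, a flag is a coin flip, and if one in a hundred is AI, roughly 11 out of every 12 flagged images are real.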

I ran a few other images through Optic’s tool, including the other two images included in the same tweet from Israel’s official account of a dead child and another angle on the burnt corpse, and it determined they are all real. I also ran a few other images from the war, taken by journalists, through various AI image detection tools, with mixed results.

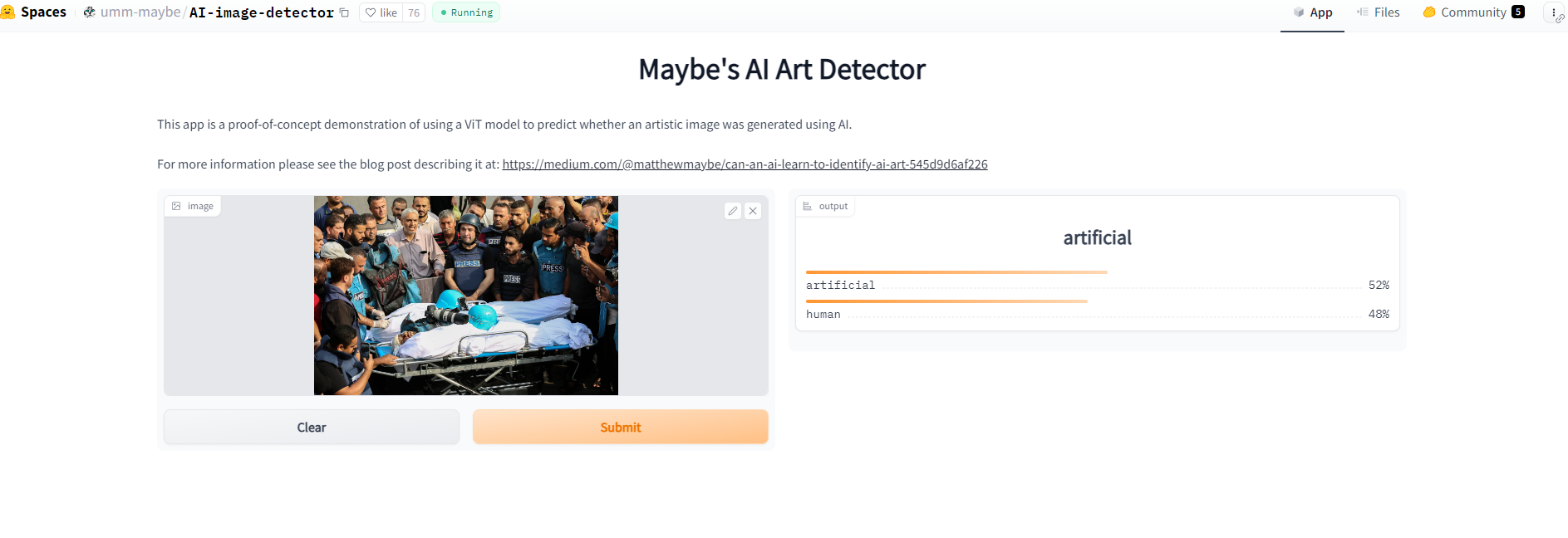

This New York Times photograph, for example, of a Palestinian journalist who was killed by Israel’s relentless bombing of Gaza, was determined to be 52 percent likely to be artificial by an AI image detection tool hosted on Hugging Face and used by some people on 4chan. This is despite the fact that this image is very much real.

The internet is filled with these kinds of tools, and we have no idea how they work exactly, or if they’re accurate. It’s also not clear what we’re supposed to take away from an automated system that spits out a figure that suggests an image is basically about as likely to be AI as it is not. When a black-box system that is at best 90 percent accurate suggests an image is 52 percent likely to be artificial, or 52 percent likely to be real, anyone can use that tool to make any argument they want.

What we know for a fact is that a few days ago Hamas attacked Israel and killed hundreds of people, including children. We know for a fact that Israel is currently bombing densely populated Gaza, that these bombs kill hundreds of children, and that hundreds more will die as long as the bombing continues.

Facts are important. They are especially important to me because verifying them is how I pay for the food I eat. But I am not sure anyone is going to gain any wisdom about how to stop the bodies from piling up if we find out the children were decapitated, shot, or burned alive. I am also confident that you are not going to find the answer by using unproven AI detection tools. If anything, they are making things worse.

“It’s a second level of disinformation,” Farid said. “There are dozens of these tools out there. Half of them say real, half say fake, there’s not a lot of signal there.”