In November, Google’s AI outfit DeepMind published a press release titled “Millions of new materials discovered with deep learning.” But now, researchers who have analyzed a subset of what DeepMind discovered say “we have yet to find any strikingly novel compounds” in that subset.

“AI tool GNoME finds 2.2 million new crystals, including 380,000 stable materials that could power future technologies,” Google wrote of the finding, adding that this was “equivalent to nearly 800 years’ worth of knowledge,” that many of the discoveries “escaped previous human chemical intuition,” and that it was “an order-of-magnitude expansion in stable materials known to humanity.” The paper was published in Nature and was picked up very widely in the press as an example of the incredible promise of AI in science.

Another paper, published at the same time and done by researchers at Lawrence Berkeley National Laboratory “in partnership with Google DeepMind … shows how our AI predictions can be leveraged for autonomous material synthesis,” Google wrote. In this experiment, researchers created an “autonomous laboratory” (A-Lab) that used “computations, historical data from the literature, machine learning, and active learning to plan and interpret the outcomes of experiments performed using robotics.” Essentially, the researchers used AI and robots to remove humans from the laboratory, and came out the other end after 17 days having discovered and synthesized new materials, which the researchers wrote “demonstrates the effectiveness of artificial intelligence-driven platforms for autonomous materials discovery.”

But in the last month, two external groups of researchers have analyzed the DeepMind and Berkeley papers and published their own analyses, which at the very least suggest this specific research is being oversold. Everyone in the materials science world that I spoke to stressed that AI holds great promise for discovering new types of materials. But they say Google and its deep learning techniques have not suddenly made an incredible breakthrough in the materials science world.

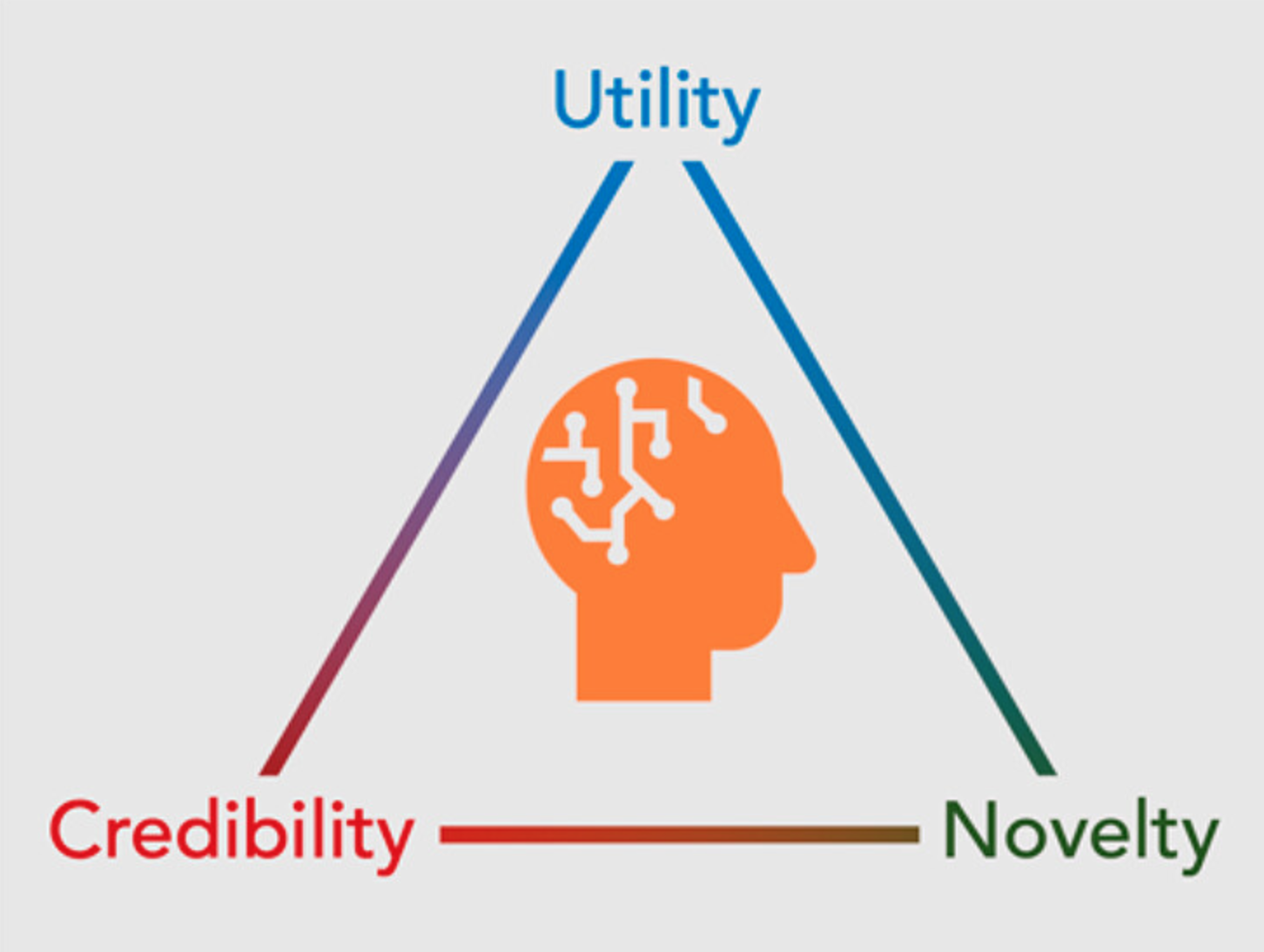

In a perspective paper published in Chemistry of Materials this week, Anthony Cheetham and Ram Seshadri of the University of California, Santa Barbara selected a random sample of the 380,000 proposed structures released by DeepMind and say that none of them meet a three-part test of whether the proposed material is “credible,” “useful,” and “novel.” They believe that what DeepMind found are “crystalline inorganic compounds and should be described as such, rather than using the more generic label ‘material,’” which they say is a term that should be reserved for things that “demonstrate some utility.”

In the analysis, they write “we have yet to find any strikingly novel compounds in the GNoME and Stable Structure listings, although we anticipate that there must be some among the 384,870 compositions. We also note that, while many of the new compositions are trivial adaptations of known materials, the computational approach delivers credible overall compositions, which gives us confidence that the underlying approach is sound.”

In a phone interview, Cheetham told me “the Google paper falls way short in terms of it being a useful, practical contribution to the experimental materials scientists.” Seshadri said “we actually think that Google has missed the mark here.”

“If I was looking for a new material to do a particular function, I wouldn’t comb through more than 2 million new compositions as proposed by Google,” Cheetham said. “I don’t think that’s the best way of going forward. I think the general methodology probably works quite well, but it needs to be a lot more focused around specific needs, so none of us have enough time in our lives to go through 2.2 million possibilities and decide how useful that might be. We spent quite a lot of time on this going through a very small subset of the things that they propose and we realize not only was there no functionality, but most of them might be credible, but they’re not very novel because they’re simple derivatives of things that are already known.”

Google DeepMind told me in a statement, “We stand by all claims made in Google DeepMind’s GNoME paper.”

“Our GNoME research represents orders of magnitude more candidate materials than were previously known to science, and hundreds of the materials we’ve predicted have already been independently synthesized by scientists around the world,” it added. The Materials Project, an open-access material property database, has found Google’s GNoME database to be top-of-the-line when compared to other machine learning models. Google also said that some of the things criticized in the Chemistry of Materials analysis, like the fact that many of the new materials have already-known structures but use different elements, were deliberate design choices by DeepMind.

The Berkeley paper, meanwhile, claimed that an “autonomous laboratory” (called “A-Lab”) took structures proposed by another project, the Materials Project, used a robot to synthesize them with no human intervention, and created 43 “novel compounds.” There is one DeepMind researcher on this paper, and Google promoted it in its press release, but Google did not itself conduct the experiment.

Human researchers analyzing this finding found that it, too, has issues: “We discuss all 43 synthetic products and point out four common shortfalls in the analysis. These errors unfortunately lead to the conclusion that no new materials have been discovered in that work,” the authors, who include Leslie Schoop of Princeton University and Robert Palgrave of University College London, wrote in their analysis.

Again, each of the four researchers I spoke to said that they believe an AI-guided process for finding new materials shows promise, but that the specific papers they analyzed were not necessarily huge breakthroughs and should not be contextualized as such.

“In the DeepMind paper there are many examples of predicted materials that are clearly nonsensical. Not only to subject experts, but most high school students could say that compounds like H2O11 (which is a Deepmind prediction) do not look right,” Palgrave told me. “There are many many other examples of clearly wrong compounds and Cheetham/Seshadri do a great job of breaking this down more diplomatically than I am doing here. To me it seems that basic quality control has not happened—for the ML to be outputting such compounds as predictions is alarming and to me shows something has gone wrong.”

AI has been used to flood the internet with lots of content that cannot be easily parsed by humans, which makes discovering human-generated, high-quality work a challenge. It's an imperfect analogy, but the researchers I spoke to said something similar could happen in materials science as well: Giant databases of potential structures don't necessarily make it easier to create something that is going to have a positive impact on society.

“There is some benefit to knowing millions of materials (if accurate) but how do you navigate this space looking for useful materials to make?” Palgrave said. “Better to have an idea of a few new compounds with exceptionally useful properties than a million where you have no idea which are good.”

Schoop said that there were already “50k unique crystalline inorganic compounds, but we only know the properties of a fraction of these. So it is not very clear to me why we need millions of more compounds if we haven’t yet understood all the ones we do know. It might be much more useful to predict properties of materials than just plainly new materials.”

Again, Google DeepMind says it stands by its paper and takes issue with these characterizations. But it is fair to say that there is now a lot of debate about how AI and machine learning can be used to discover new materials, how these discoveries should be contextualized, tested, and acted upon, and whether dumping gigantic databases of proposed structures on the world is actually going to lead to new, tangible breakthroughs for society, or will simply create a lot of noise.

“We don’t think that there’s a problem with AI fundamentally,” Seshadri said. “We think it’s a problem of how you use it. We’re not like, old fashioned people who think these techniques have no place in our science.”