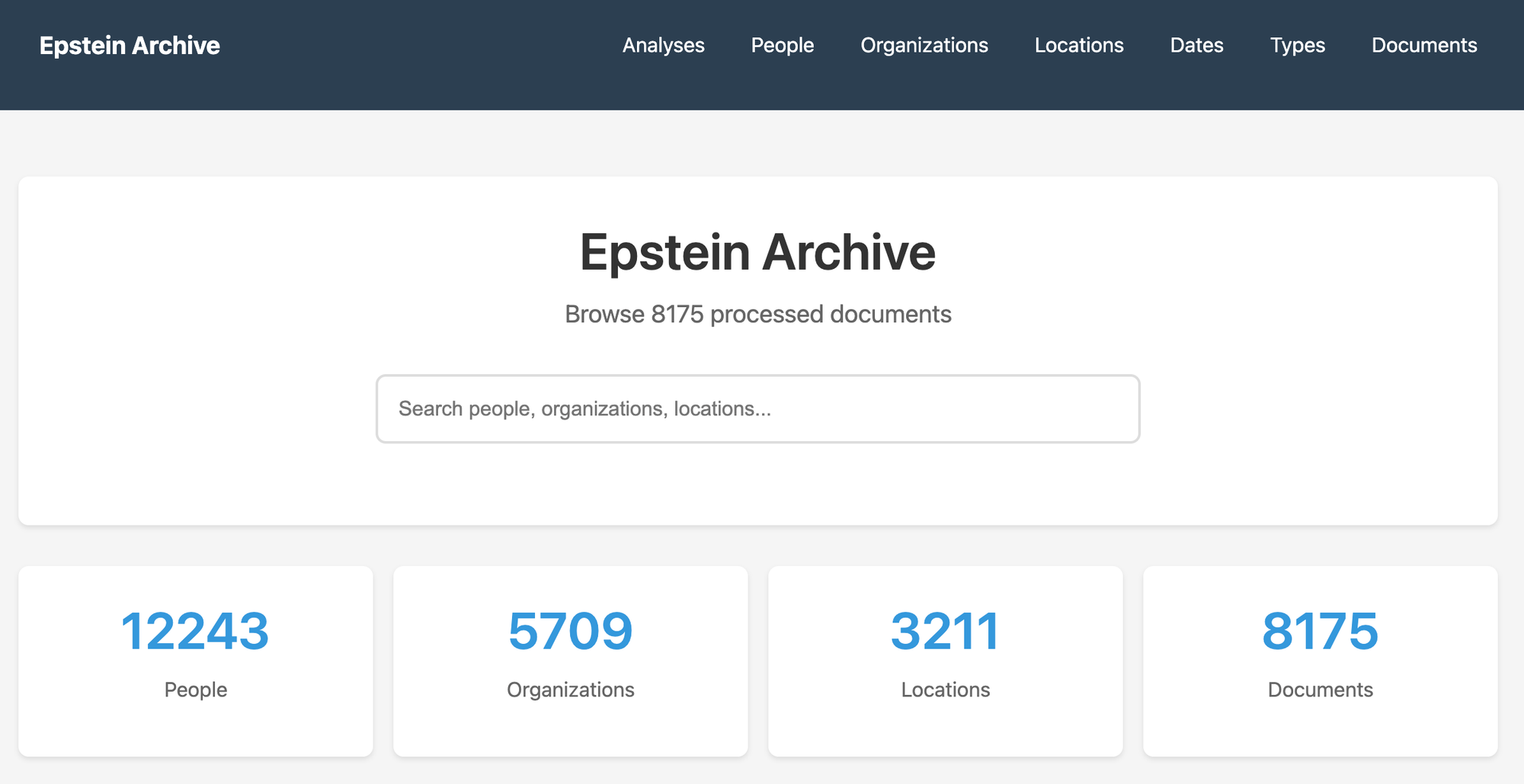

A data hoarder on Reddit used AI to create a searchable database of more than 8,100 files about Jeffrey Epstein released by the House Oversight Committee, making it one of the easiest ways to search through a very messy batch of files.

The project, called Epstein Archive and released on Github, allows people to search the database to find files that mention specific people, organizations, locations, and dates.

Thousands of people are named in the more than 33,295 pages worth of files released by the House Oversight Committee last month as part of its investigation into Epstein. The files, which are partial and redacted (as in, they are not the “full Epstein files”), were subpoenaed by the committee from the Department of Justice. The files were released to the public in an extremely poorly organized Google Drive folder, and were released primarily as jpg and tif images of documents, which are not in any discernible order. Because of this, they have been an absolute nightmare to search.

The Redditor, nicko170, said they had a large language model transcribe, collate, and summarize the documents and built the database to make the files more easily searchable.

“It processes and tries to restore documents into a full document from the mixed pages - some have errored, but will capture them and come back to fix,” they wrote. “Not here to make a buck, just hoping to collate and sort through all these files in an efficient way for everyone.”

On the project’s GitHub, they further explain how it was built:

“This project automatically processes thousands of scanned document pages using AI-powered OCR to:

- Extract and preserve all text (printed and handwritten)

- Identify and index entities (people, organizations, locations, dates)

- Reconstruct multi-page documents from individual scans

- Provide a searchable web interface to explore the archive

This is a public service project. All documents are from public releases. This archive makes them more accessible and searchable.”

The database only features OCR’ed transcripts of the files and not images of the files, though it does tell users the filename so they can go and download the actual document themselves. Like essentially all OCR and LLM projects, there are some errors. Some of the transcripts are gibberish, presumably caused by blurry or illegible type and handwriting on the source documents. But the project represents a pretty good use of AI technology, because the source documents themselves are so messy and were released in such a terrible format that is extremely time consuming to go through them.

The database is indeed pretty usable; I was able to quickly find files that mention Donald Trump (which have already been widely reported on).

To be clear, there are no new files included in this tool, but for people who are looking to explore what has been released in a coherent, straightforward way, this is one of the better options out there. Besides releasing the database and the code for it on Github, they have also turned the entire project into a torrent file, meaning it cannot be easily deleted from the internet.